SoundSketch

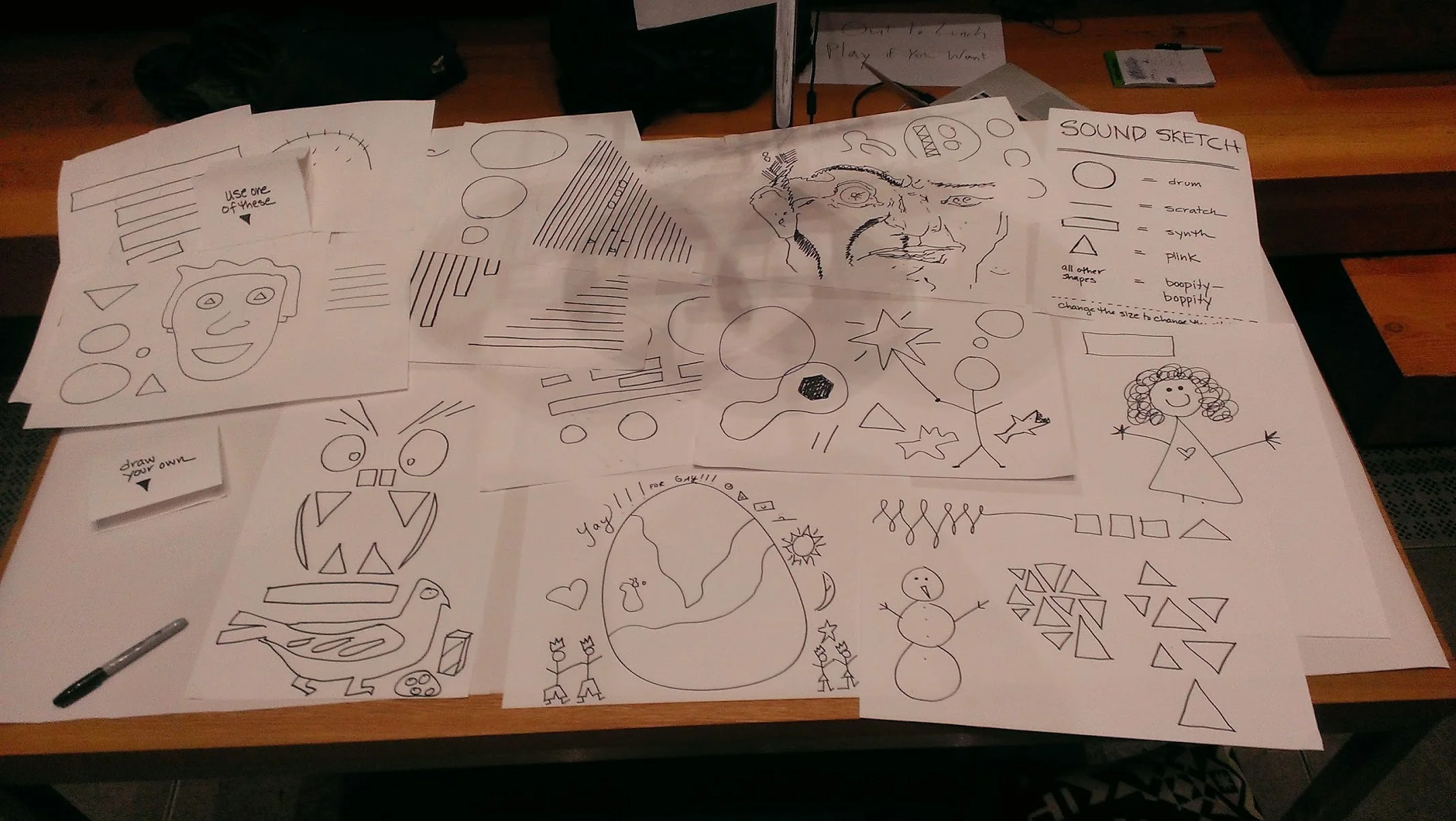

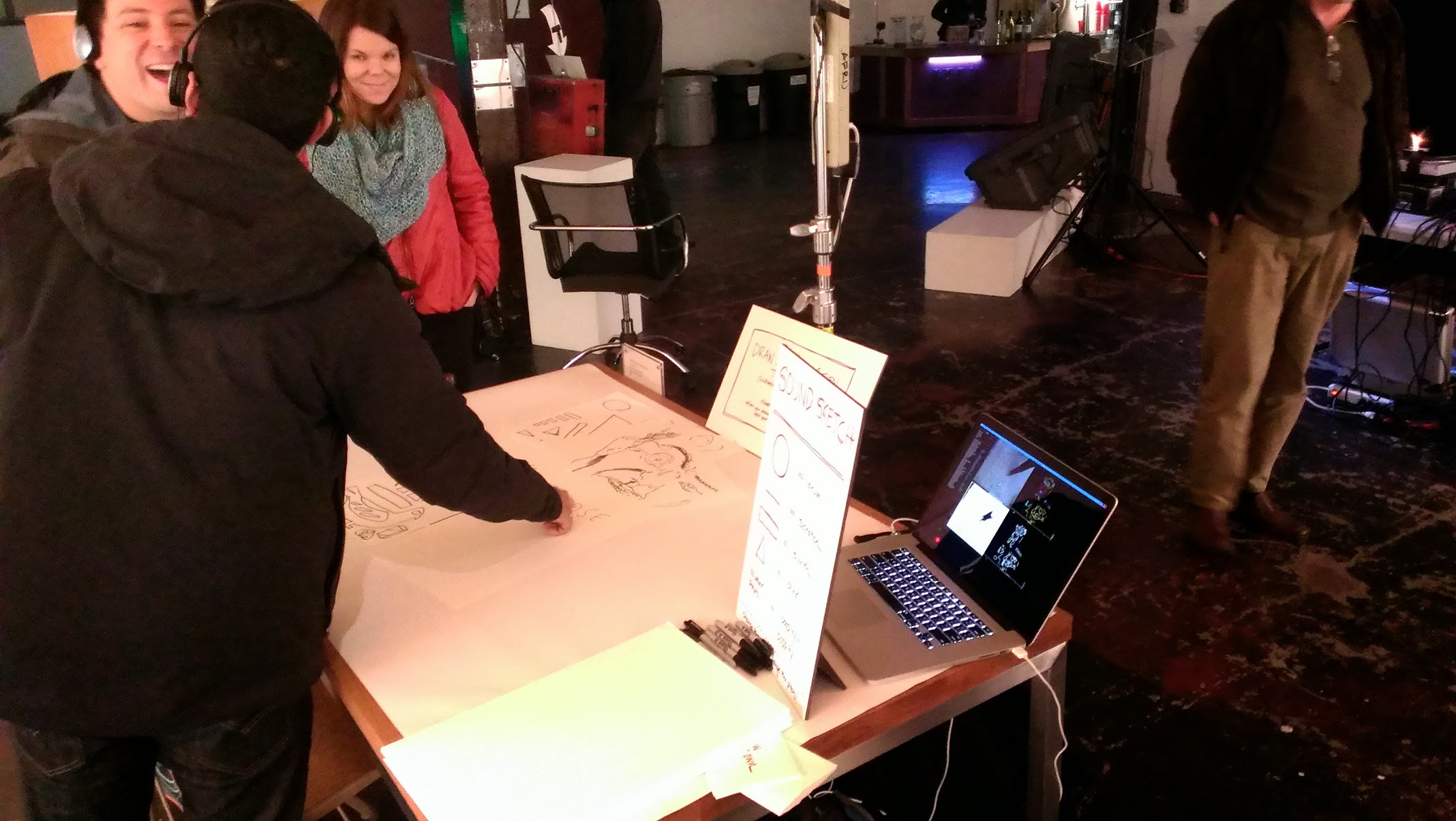

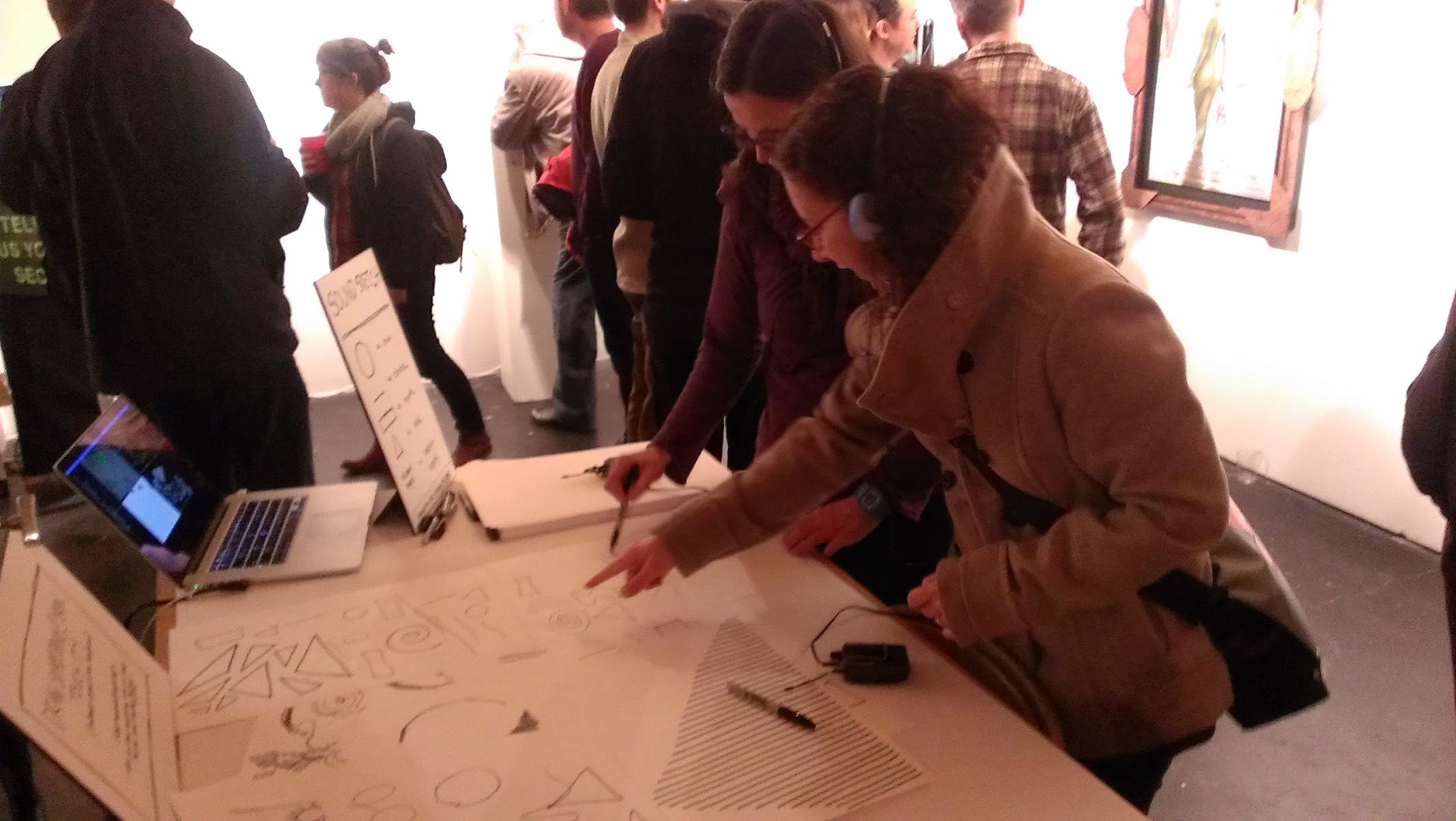

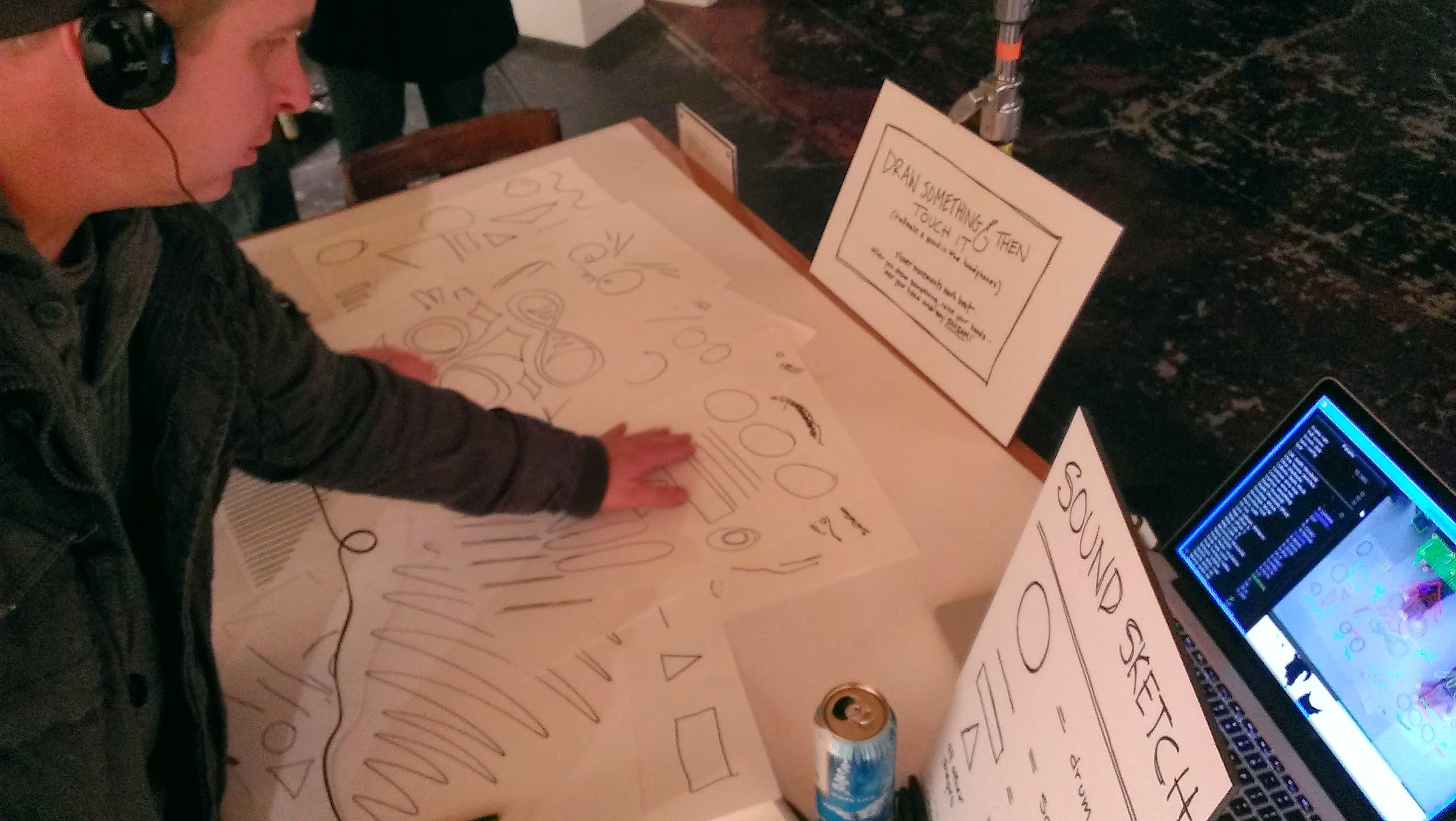

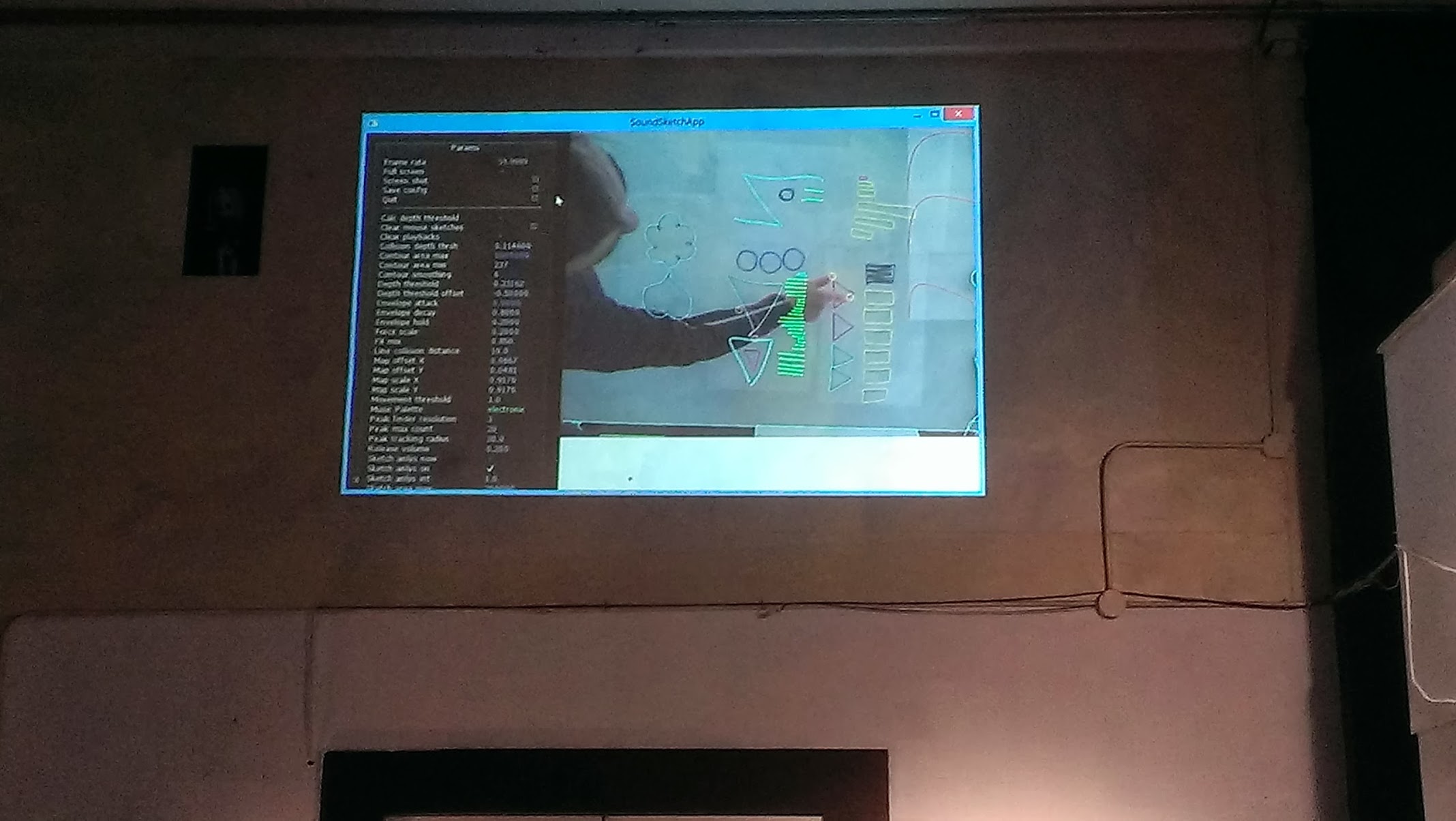

SoundSketch is a new kind of musical instrument and playground. You use pen and paper to draw instruments and then play them by touching them with your fingers or by arranging them in space.

It connects the physical world of ink, paper, and fingers to the digital world of electronically synthesized and controlled sound. And it connects the expressiveness of the visual world to the expressiveness of the audial world. Want to draw a drum set and play it? Want to build a monster's face and listen to it? Want to draw anything you can imagine and see what happens? Go ahead. Grab a marker, draw something, and use your fingers to tap, fiddle, strum, or whatever.

The PR video for the third version of SoundSketch, on which I served as Concept Lead.

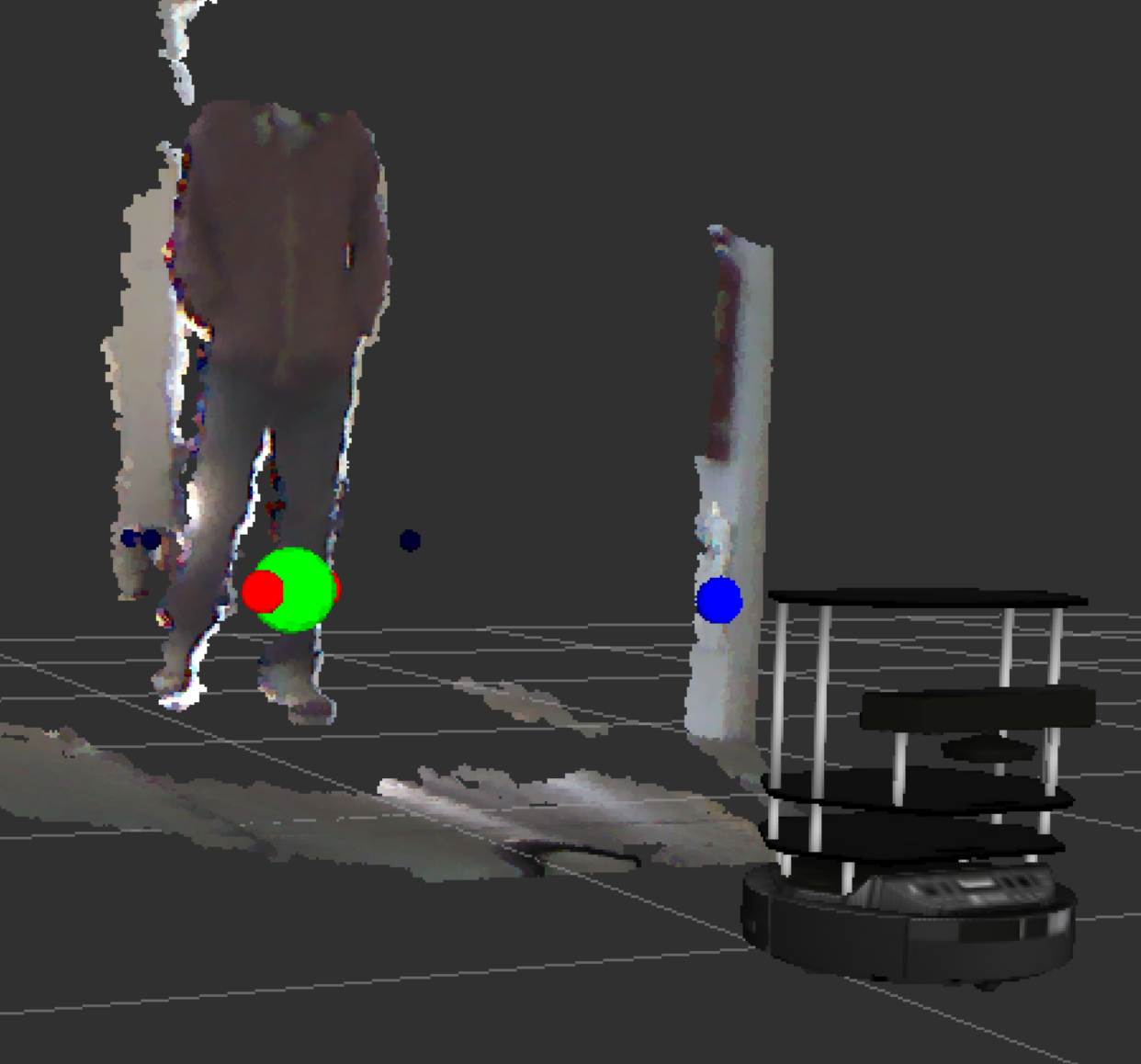

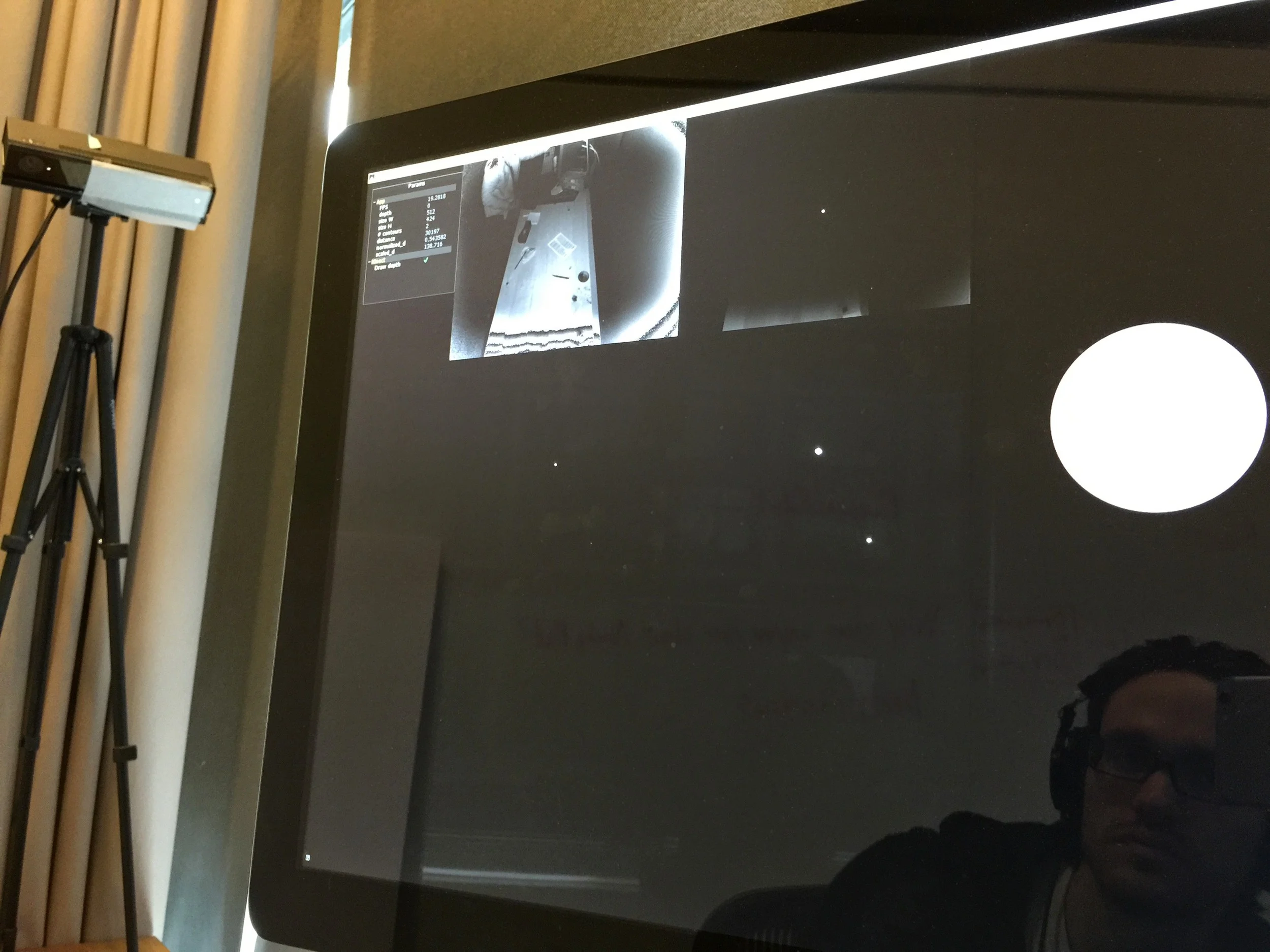

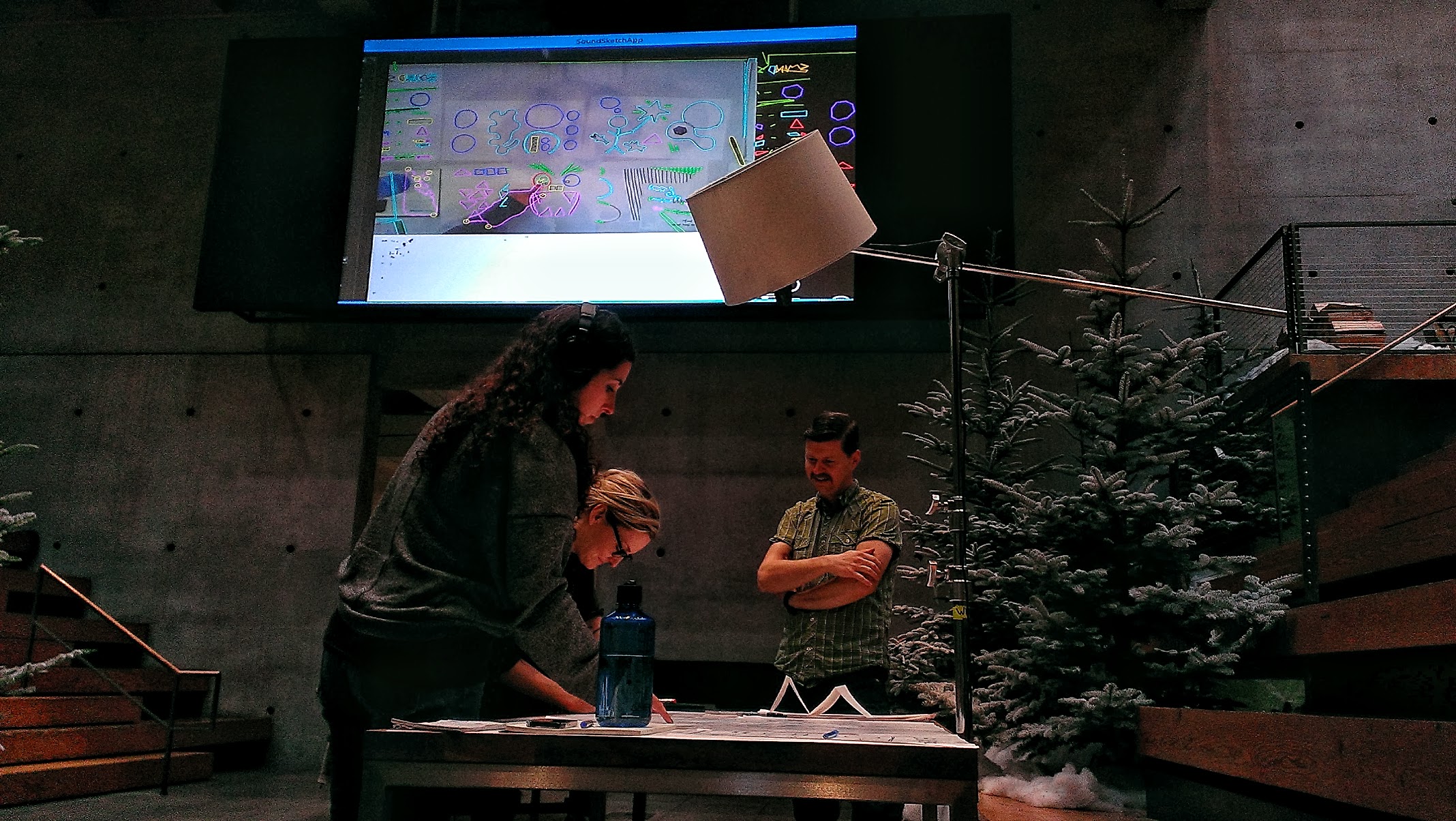

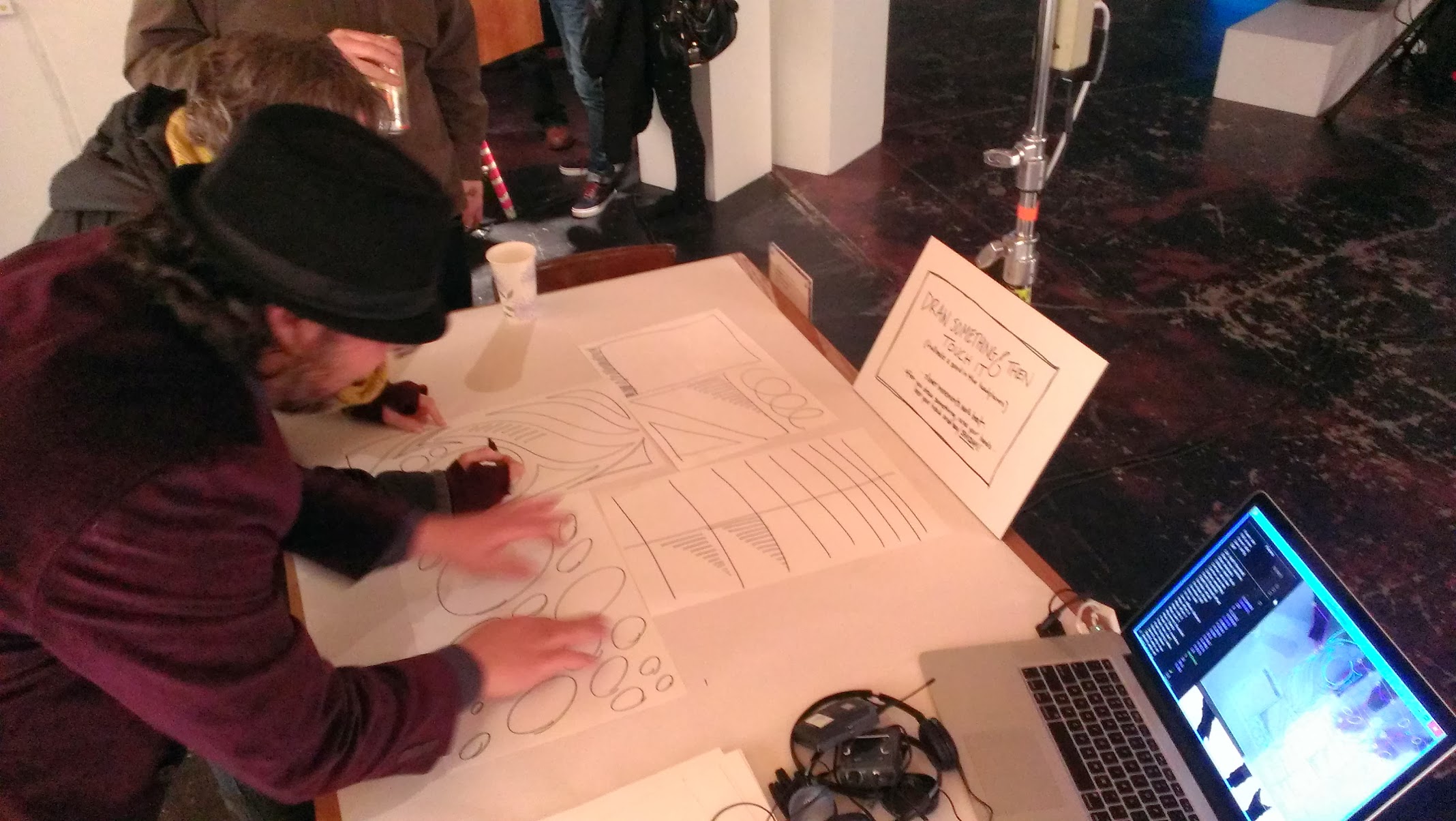

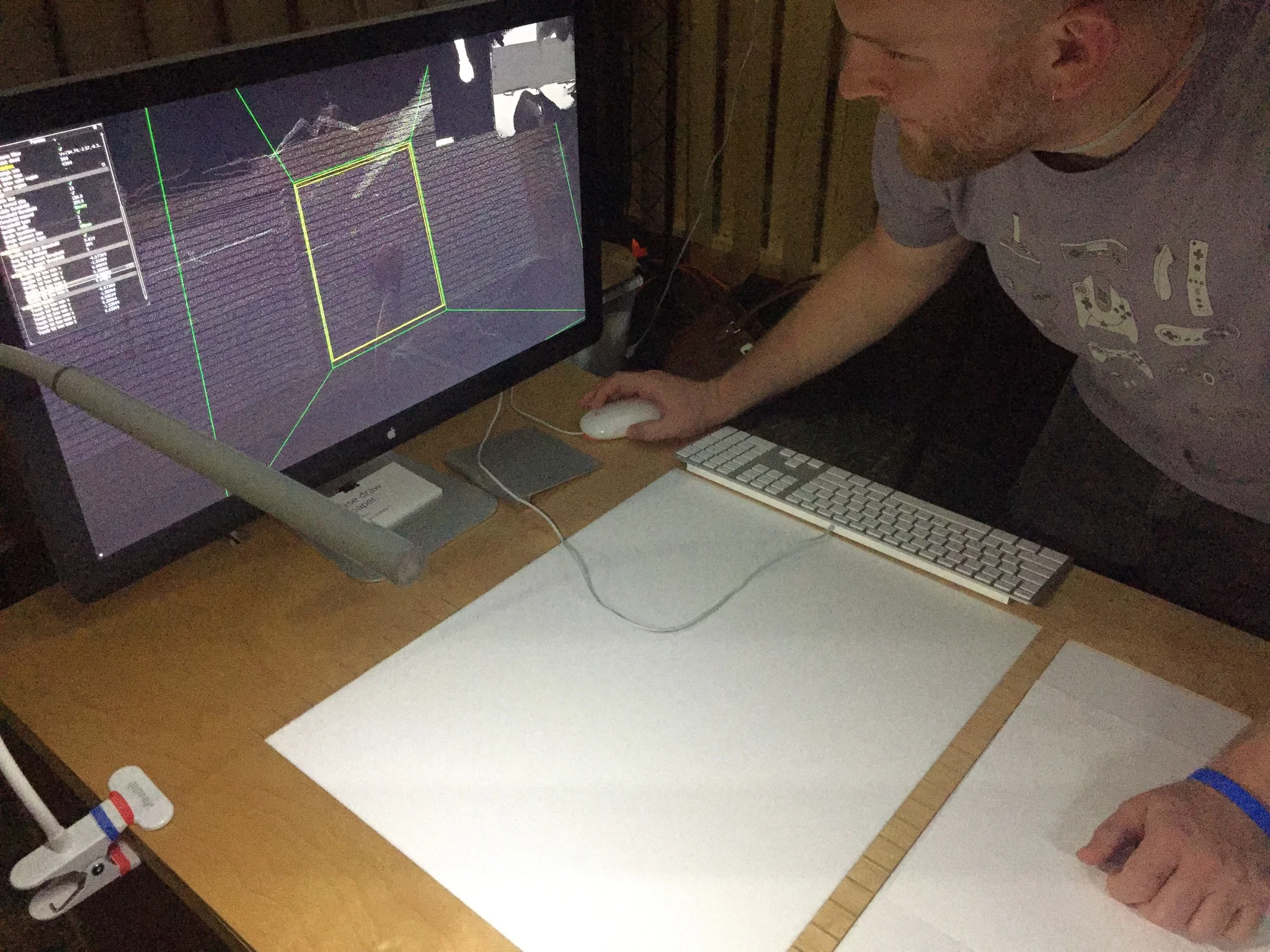

SoundSketch started in 2012, and I've worked on two newer versions since. I made the first version in school when I found a bit of random yarn and a discarded tripod in the corner of my research lab. I used them to hang my Kinect above a table.

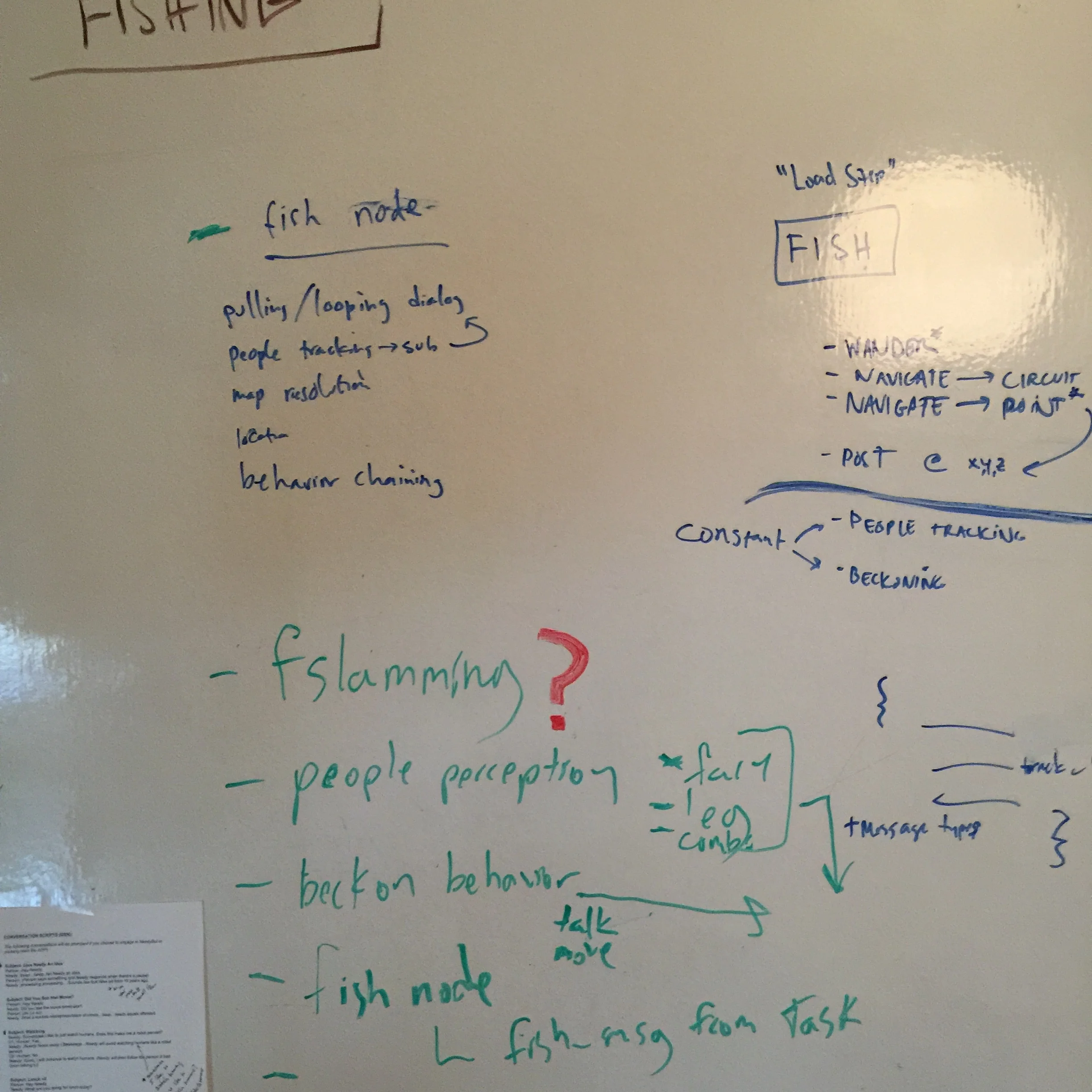

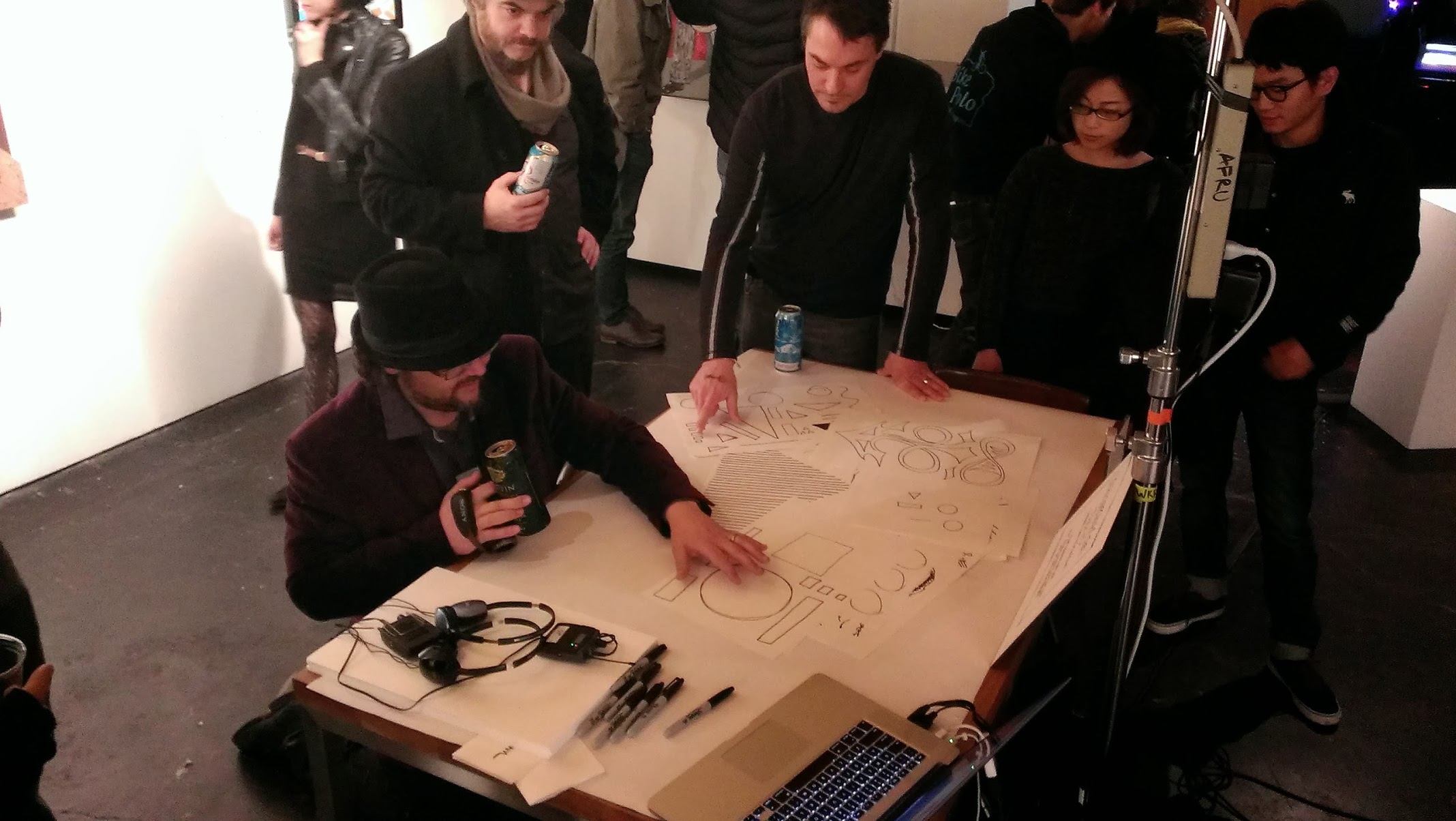

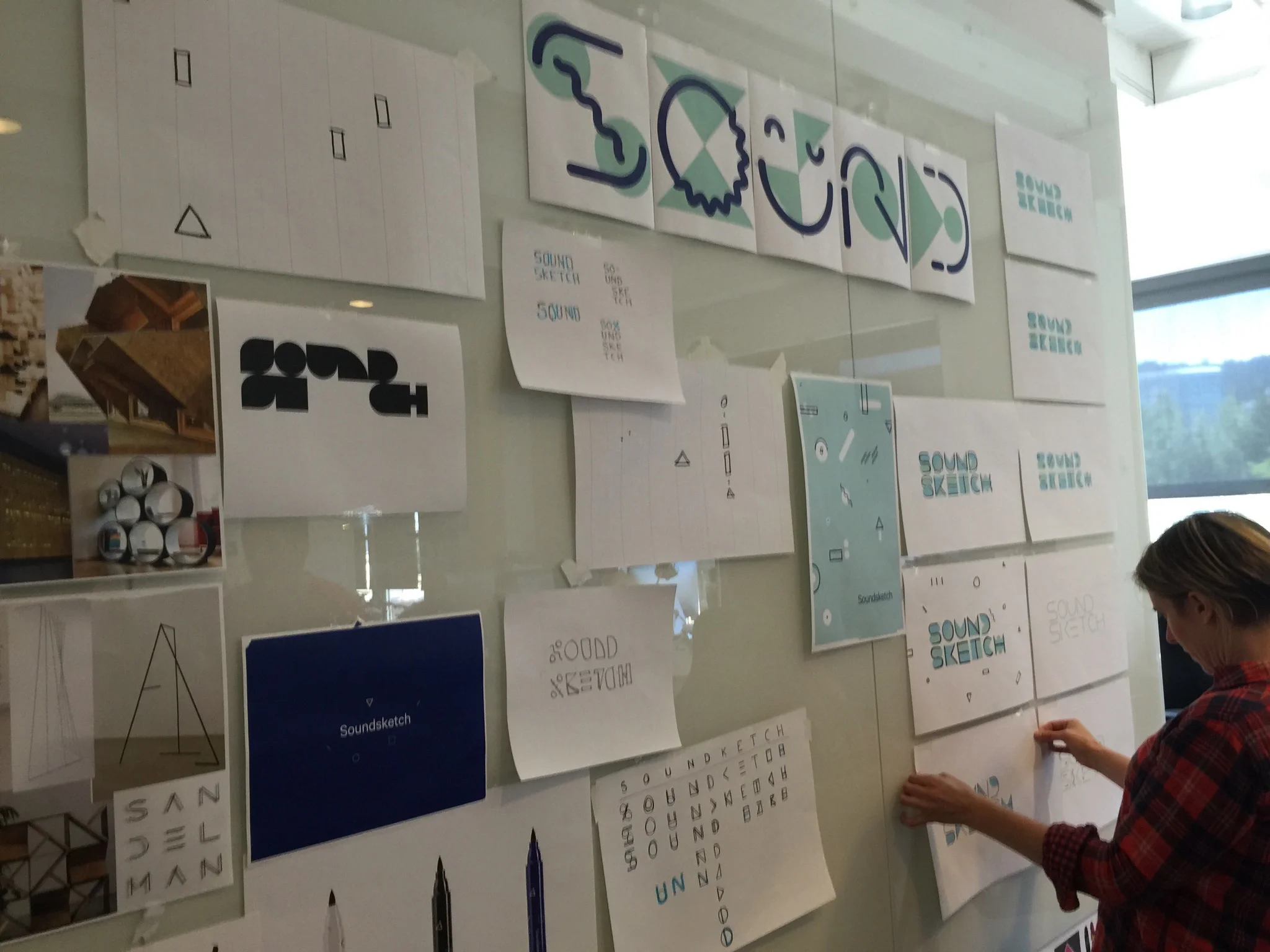

Though SoundSketch has evolved from version to version, especially in the amount of money spent on each, the interaction design, concept, and algorithms are essentially the same across all three. I developed version 2 with one other engineer during my first month at Wieden+Kennedy. For version 3, I took the role of Concept Lead and worked with a team of five, including two engineers, two designers, and a woodworker.

Me explaining my very first version of SoundSketch. I created it for a User Interface Prototyping class at Georgia Tech. This video goes into more detail on the tech and interaction mechanics and shows the system being used live.

The idea really just came from wanting to use my Kinect for a novel user interface project. I love music, so I couldn't think of anything else I'd rather make an interface for. My original and favorite idea was, instead of using pen and paper, to just use all of the objects in your environment as the musical interface. Perhaps you could use the cushions of your couch as drums. And the coasters on the coffee table as piano keys. And so on. Unfortunately, that's a really hard computer vision problem to solve and, at the time, it was out of my depth.

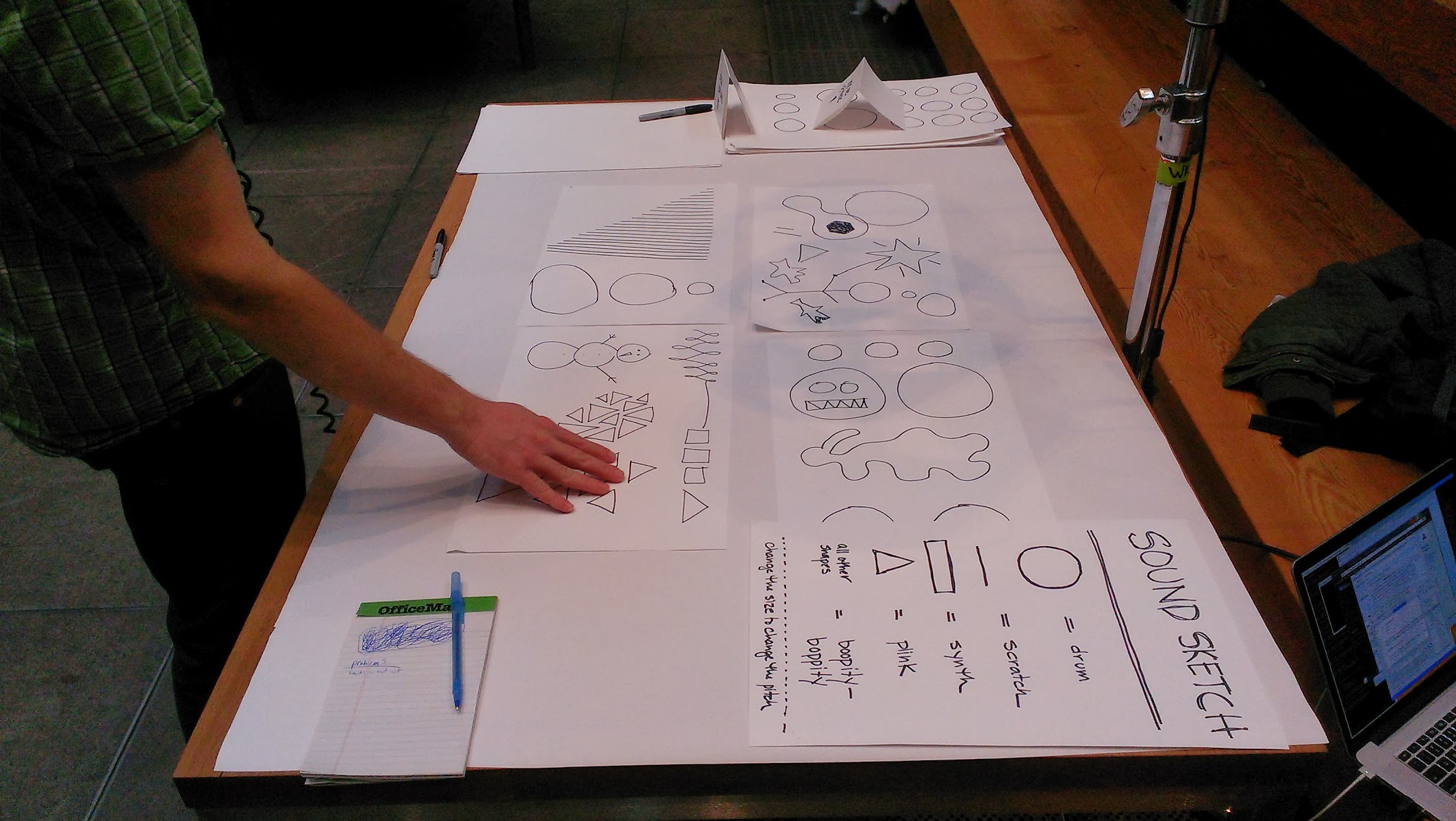

So, I arrived at drawing with black markers on white paper.

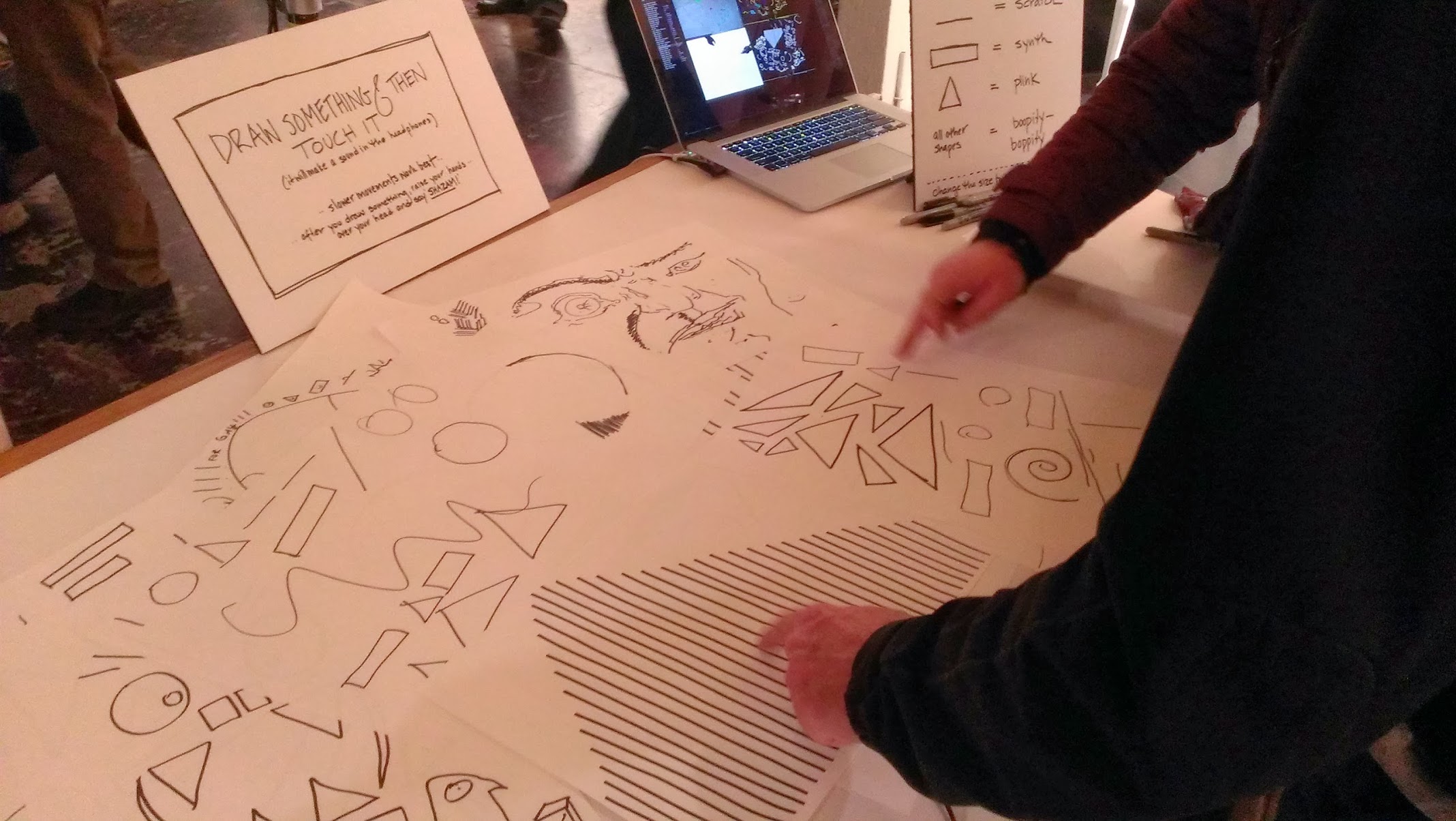

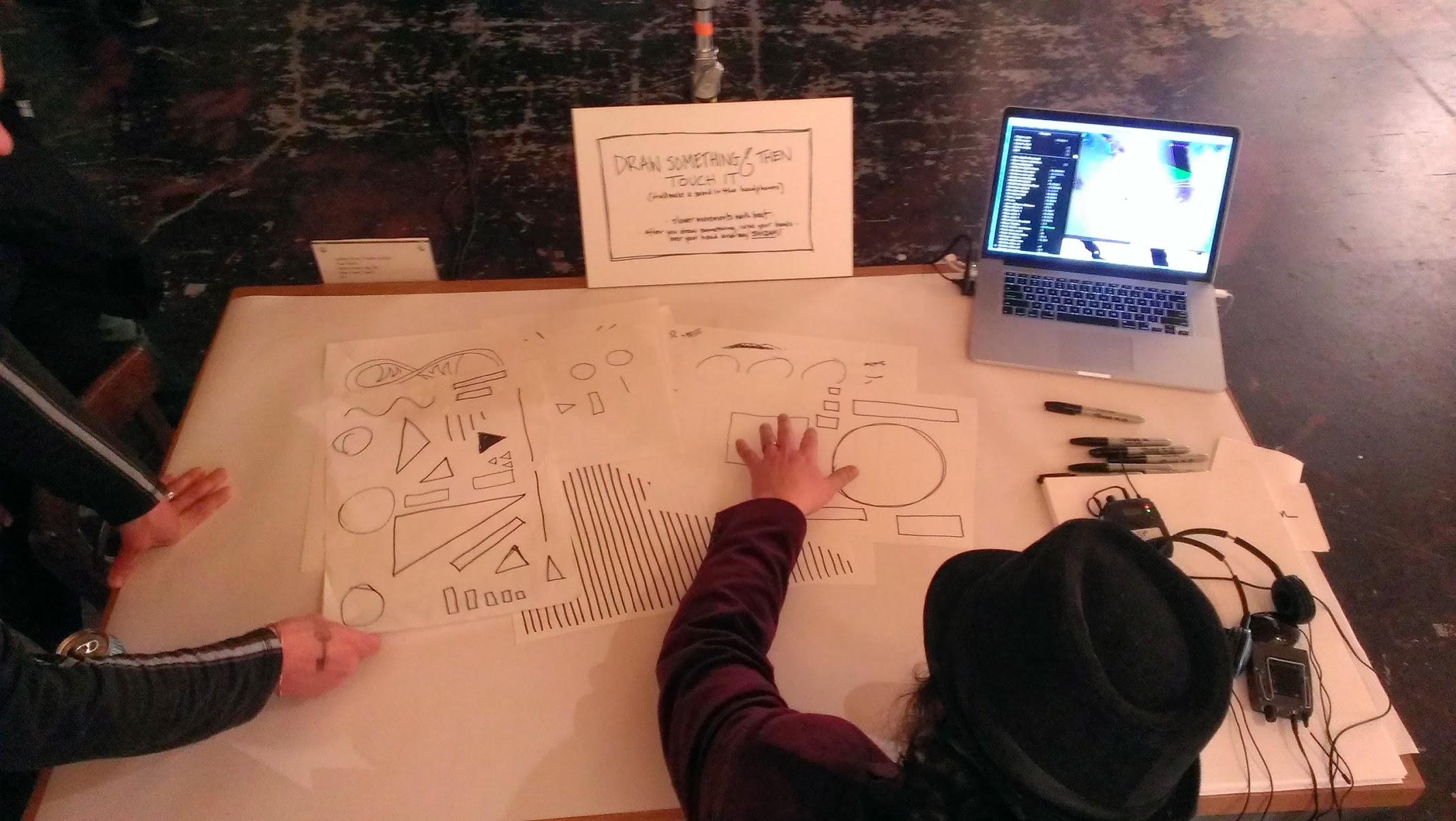

As much as possible, the system is designed to be simple and fun to jump into, yet still give users a sandbox in which to play and explore both the visual and the audial. For example, a line drawn on a piece of paper would, as you might expect, become a string on a guitar. And a rectangle would, as you might expect, become a piano key.

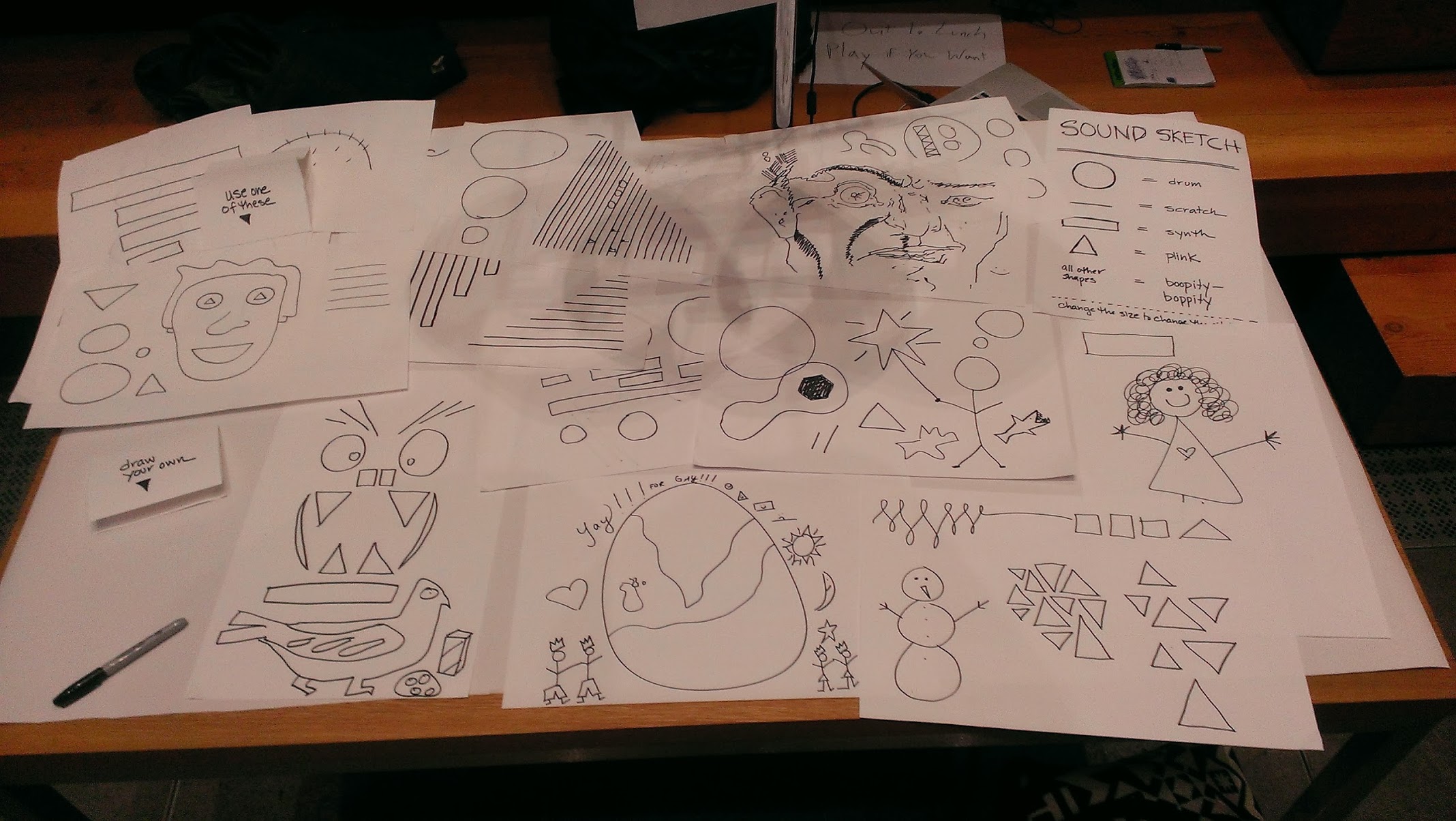

These provide good building blocks. But at the same time, there is some open-ended randomness associated with images of certain sizes and shapes, so that people are surprised by the sounds that might come out of their creations and encouraged to draw all sorts of weird things.
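To make the idea concrete, here is a minimal sketch of how drawn shapes might be mapped to sounds. It is not the actual SoundSketch code: the `Shape` type, the aspect-ratio thresholds, the sound names, and the seeding trick are all assumptions made up for illustration, and a real system would first need to find the drawn marks in the camera image.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Shape:
    """Bounding box of one drawn mark, in pixels (hypothetical type)."""
    width: float
    height: float


# Hypothetical thresholds and sound names, chosen only for illustration.
LINE_ASPECT = 8.0   # long side at least 8x the short side: treat as a line
RECT_ASPECT = 1.5   # long side at least 1.5x the short side: treat as a rectangle
SURPRISE_SOUNDS = ["bell", "growl", "chirp", "thud"]


def instrument_for(shape: Shape) -> str:
    """Pick a sound for a drawn shape based on how elongated it is."""
    long_side = max(shape.width, shape.height)
    short_side = max(min(shape.width, shape.height), 1.0)  # avoid dividing by zero
    aspect = long_side / short_side

    if aspect >= LINE_ASPECT:
        return "string"      # a line becomes a guitar string
    if aspect >= RECT_ASPECT:
        return "piano_key"   # a rectangle becomes a piano key

    # Everything else gets a "surprise" sound. Seeding from the size means
    # the same drawing keeps the same sound each time it is evaluated.
    rng = random.Random(int(shape.width) * 10007 + int(shape.height))
    return rng.choice(SURPRISE_SOUNDS)
```

In this sketch the predictable rules come first and the randomness is only a fallback, which is one way to keep the basic building blocks easy to learn while still rewarding odd drawings.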

After watching people interact and play with SoundSketch for over three years now, I can say that these two major goals have been borne out. As soon as people begin tinkering, they are delighted to see pen and paper begin doing things pen and paper don't normally do. But they also instantly grasp the basic rules for drawing their own musical instrument. At the same time, though, they often draw and strum their way into pretty funky territory. They explore.

If you'd like to talk in depth about the design or engineering of any part of SoundSketch, just let me know! Briefly, the first version ran on my 2012 MacBook Air and used Processing and Java; the second version ran on a 2014 MacBook Pro and used C++ and the Cinder creative coding framework; and the third version ran on two 2015 Mac Pros and used Clojure, C++, Processing, Cinder, and Ableton Live. All versions used OpenCV.

In my spare time, I'm working on a related but new concept that is slightly more mobile and social!