needybot

needybot is a robot that needs help to do anything. To get to where it's going; to meet new people; to figure out what's funny; to survive. And in this sense, it's the most human robot ever.

The Idea

It's an idea that came to me when I was wandering around Wieden+Kennedy, daydreaming about how I could get a mobile robot to be able to go up and down on the elevator by itself.

Dan Wieden spending some quality time with needy.

Unfortunately, that's a really hard engineering problem. The robot needs to:

- navigate to the elevator

- push the elevator button

- get into the elevator before it closes while avoiding people

- push another elevator button

- detect when it's on the right floor

- get out of the elevator again

And that's just in the most general, high-level terms. All of that requires a whole lot of coordination between the robot's sensors, wheels, and button-pushing arm. Really, really complicated stuff.

But what if the robot didn't have to solve all of those problems itself? What if it could lean on humans instead? It could hang out next to the elevator and, when a human passes, just call out for help with pushing the button and getting into the elevator. Suddenly, my job as an engineer is ridiculously simpler and these complicated engineering problems are transformed into less complicated social problems.
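To make that trade concrete, here is a minimal sketch of the elevator trip as an ordered list of steps, each one assigned either to the robot or to a passing human. The step names, the `NEEDS_HUMAN` split, and the helper functions are all my own illustrative assumptions, not code from needybot itself.

```python
from enum import Enum, auto


class Step(Enum):
    """The elevator trip, in the order the steps have to happen."""
    NAVIGATE_TO_ELEVATOR = auto()
    PUSH_CALL_BUTTON = auto()
    ENTER_ELEVATOR = auto()
    PUSH_FLOOR_BUTTON = auto()
    DETECT_FLOOR = auto()
    EXIT_ELEVATOR = auto()


# Assumption: which steps get handed to a human. This split is illustrative only.
NEEDS_HUMAN = {Step.PUSH_CALL_BUTTON, Step.ENTER_ELEVATOR, Step.PUSH_FLOOR_BUTTON}


def plan_trip(needs_human=NEEDS_HUMAN):
    """Return (step, who) pairs, where who is 'human' or 'robot'."""
    return [(step, "human" if step in needs_human else "robot") for step in Step]


def count_robot_steps(plan):
    """Count the steps the robot still has to solve on its own."""
    return sum(1 for _, who in plan if who == "robot")


fully_autonomous = plan_trip(needs_human=set())
with_help = plan_trip()
```

Under these assumptions, the fully autonomous plan leaves every step as an engineering problem, while the plan with help leaves only the steps that don't involve buttons or doors. The hard part moves into getting a human to say yes.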

There's something incredibly fascinating and deep here. This isn't just about solving engineering problems anymore. We're asking humans to help a robot. We're asking them to be curious about and empathize with a machine that, in the media, is better than them at everything. It's taking their jobs, beating them in games, murdering them, and destroying the world. It's scary.

But ironically, the core of this particular machine is perhaps the most human thing there is: empathy. A human's ability to do much of anything (to eat, to learn, to have success in life generally) depends largely on their ability to connect with people and to plug into the human network. And now, with needybot, this is starting to be true for robots too. Just like anybody, needy's survival depends on his ability to connect with people and on their caring about him.

It's a prescient cultural statement. And it turns the history of Artificial Intelligence, a field that started in the 1950s, on its head.

But as I was kicking many of these ideas around, I would emphatically tell my co-workers about them. About a robot that needs help and about some of the "needy scenarios" that the robot could be in. And every time, people's eyes lit up. Every time, people seemed to get excited about something very interesting in this idea. By the time I told my boss about it and he asked me if I was interested in making it a major project for our department, most of my teammates already knew all about this thing I called needybot and wanted to help make it real!

So how do we engineer and design an organism that people care about? How do we build a machine that actually needs help and is able to ask for it? And how do we create an experience that at every point effectively communicates some of these grand human ideas about empathy, vulnerability, friendship, and self-actualization?

Essentially, how do we use robots as a medium?

Concept and Design

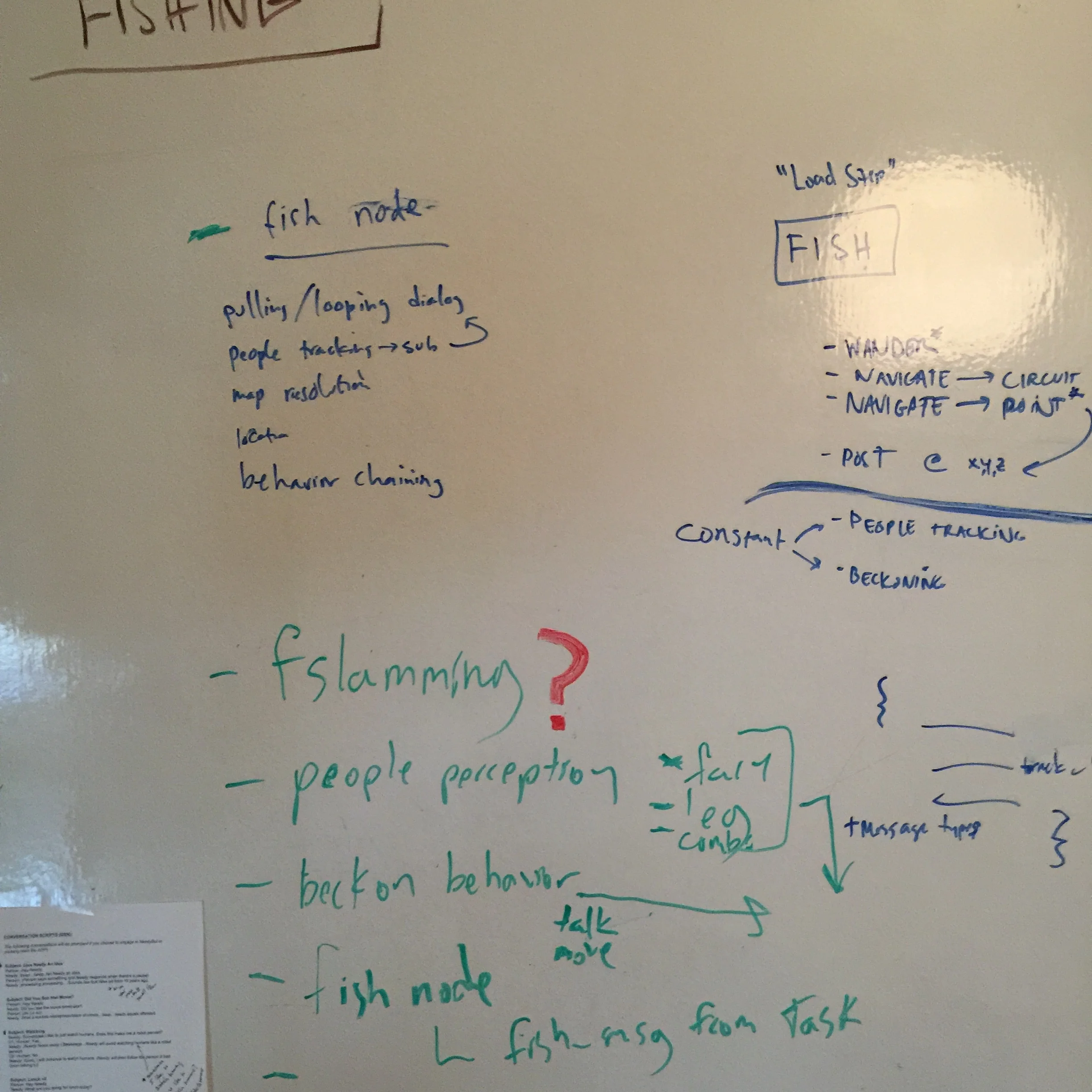

This isn't something anyone has done much of, so we started with design sprints to focus the idea on an experience that everyone bought into. I briefed a handful of designers and developers on my idea, gave an overview of the field of social robotics (mostly via Cynthia Breazeal's work), and introduced some of the tools we would likely use, such as the Robot Operating System (ROS) and the TurtleBot 2.

After that initial briefing, we had a number of additional design sessions and sprints, each focused on one of the many work streams required to make needy whole. Topics included figuring out the one sentence that described the intent behind the idea, figuring out needy's voice as a character, exploring different interaction scenarios and activities for needy, and breaking down needy's different communication strategies.

Through all of this I came to realize that a great lens for looking at needybot is as a game. He's not in a virtual world and it doesn't involve shooting anything or collecting coins. But thinking about how you make an interactive robot is very much the same as figuring out how you make a game. And many of the conceptual and technical tools apply to both, and are extremely helpful.

For example, what are the interaction mechanics for needy? What can needybot actually do and how can he communicate? How do we connect engineering a robot to interesting design that people actually understand and are able to interact with? I especially love the idea of an interaction mechanic. It ties together the empathy required for thoughtful and effective design with the engineering required for complex functionality.

We arrived at several core interaction mechanics for needy:

- touching needy's face (a tablet)

- needy doing facial recognition

- needy following people around

- needy being able to say prerecorded things

- and needy navigating around the building.

With these core engineering problems solvable and nailed down, we began to be able to see how to design a living organism. One that is intelligible to people. And one that people might be able to care about and take action for.

I was central in this part of interaction design for needy: figuring out how to connect robotics technologies to interaction mechanics that people can actually understand and interact with. And as we were always racing to code and build as we designed, my goal was to put needy in front of people as much as possible. I did this informally very often, observing and interviewing with the goal of finding pain points and looking for opportunities to take back to the next engineering iteration. But I also organized and conducted a slightly more formal user study in which I ran about 20 people through a specific helping task. I tracked a few quantitative variables, such as the time it took to complete the task and counts of specific kinds of problems. But mostly I focused on observing interactions, asking people to narrate their thoughts during interactions, and prying into what they thought about things afterwards in a semi-structured interview.

Another key part of needy that I was less directly involved with was his physical design. A team including UX designers, motion designers, and engineers worked to design and fabricate needy's physical build to be both functional and adorable. Needy's interior was custom designed to hold all of his innards safely in place, his eyeball look and motion were designed to easily convey needy's emotions and current thoughts, and his skeleton was designed and 3D printed to hold his fuzzy, adorable exterior in place.

Engineering

For the majority of the project, I worked with three other engineers to build needybot. One focused on the tablet that is needy's face, which involved handling communications from needy's robotics control software as well as face recognition and face animations. The other three of us worked on needy's core software and hardware.

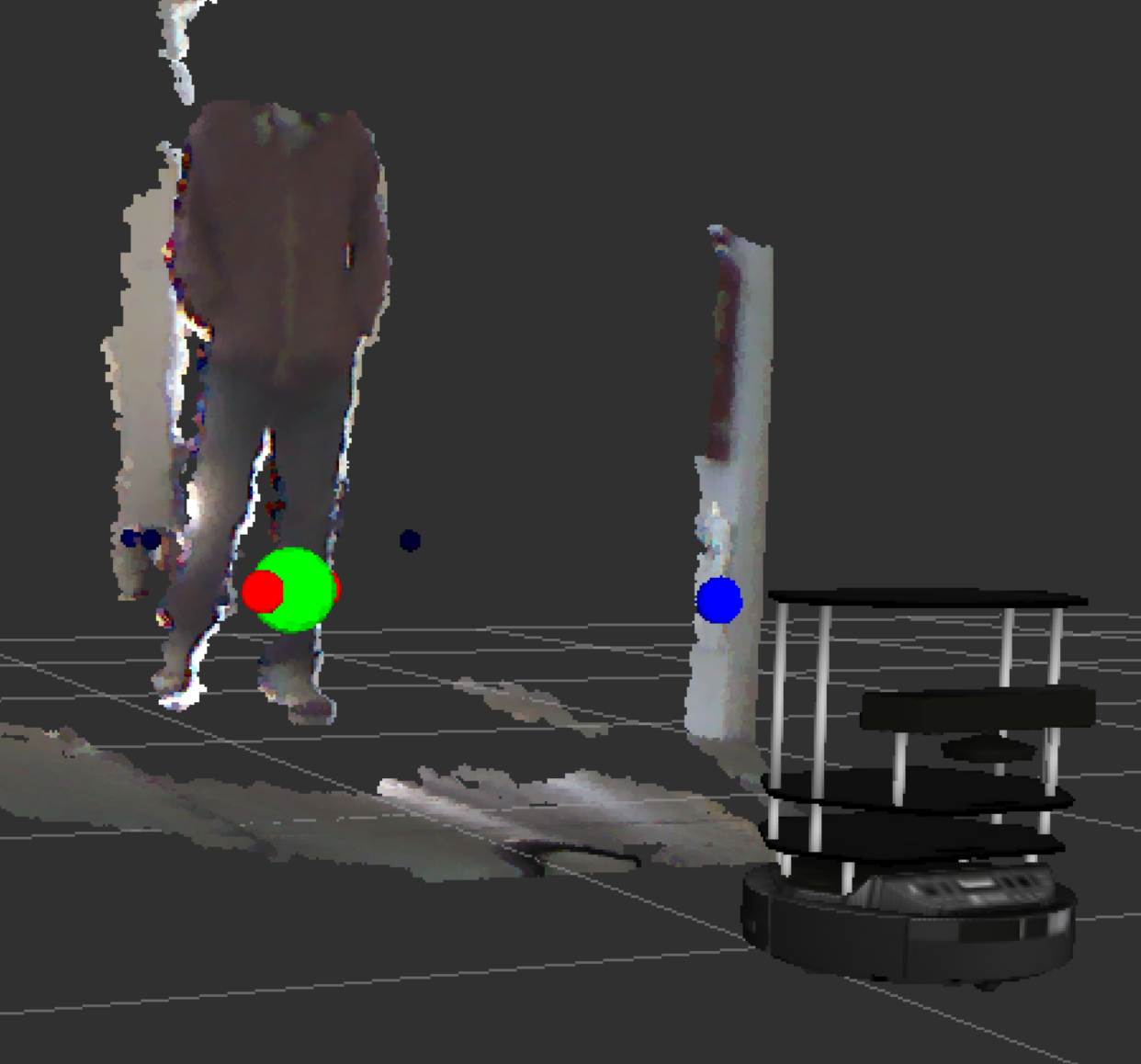

This mostly involved using Python and the Robot Operating System to manage needy's perception, controls, and interactions. We used the TurtleBot 2 as needy's platform, so at his core he is a small mobile base that can turn in place and has various infrared floor and motion sensors, as well as a 3D depth sensor. We also ended up attaching a heat sensor with a Raspberry Pi when we realized that needy's follow behavior wasn't quite as good as we wanted. Other than that, needy's core software is a series of distributed, event-based nodes that process information (such as incoming sensor data, messages coming from the iPad, or needy's current state) and pass along the results to whatever might need them, whether that's another node or some system external to needy.
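For a rough feel of what "event-based nodes" means, here is a small plain-Python sketch of the pattern. It is not the ROS API and not needy's actual code: the `Bus` class stands in for a publish/subscribe layer, and the topic names, message fields, speed, and stop distance are all hypothetical values I picked for illustration.

```python
from collections import defaultdict


class Bus:
    """Tiny in-process stand-in for a publish/subscribe message layer."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, callback):
        self._subscribers[topic].append(callback)

    def publish(self, topic, message):
        # Deliver the message to every callback registered on this topic.
        for callback in list(self._subscribers[topic]):
            callback(message)


class FollowNode:
    """Hypothetical node: turns person-detection events into drive commands."""

    def __init__(self, bus, stop_distance_m=0.8):
        self.bus = bus
        self.stop_distance_m = stop_distance_m
        bus.subscribe("person_detected", self.on_person)

    def on_person(self, msg):
        # Drive forward only while the person is farther than the stop distance.
        speed = 0.2 if msg["distance_m"] > self.stop_distance_m else 0.0
        self.bus.publish("drive_command", {"linear_mps": speed})


bus = Bus()
commands = []                                   # collects whatever the node emits
bus.subscribe("drive_command", commands.append)
FollowNode(bus)

bus.publish("person_detected", {"distance_m": 2.0})   # far away: keep following
bus.publish("person_detected", {"distance_m": 0.5})   # close enough: stop
```

The appeal of this shape is that each node only knows about the topics it reads and writes, so a new sensor (like a heat sensor) can be added as one more publisher without touching the nodes that already exist.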

As always, if you'd like to discuss any of the engineering or design details in depth, please don't hesitate to ask. Needybot should be released by April 2016. By then there will be much more available in the way of polished videos and information about needybot and what people are doing with him as he lives his life alongside the inhabitants of Wieden+Kennedy!